AWS Lambda and ELK

I’ve been using the ELK stack for over three years. It’s a tool I use daily at work, so it’s little surprise that when In-Touch Insight Systems went down the AWS Lambda road for one of our newest projects, I wasn’t happy with the default CloudWatch Logs UI.


By default, Lambda ships its logs to CloudWatch Logs. This is great: you can easily see what’s going on during development, and little work is needed to get up and running. There are a few ways we could have gotten those logs into our ELK stack.

How to get Logs to ELK

Sure, you could ship logs directly from your Lambda function to Redis for Logstash to pick up, or even directly to Elasticsearch.

We opted not to go with this method for two reasons: it goes against the Lambda philosophy of keeping functions small and fast, and the CloudWatch Logs generated by a Lambda function include this great line:

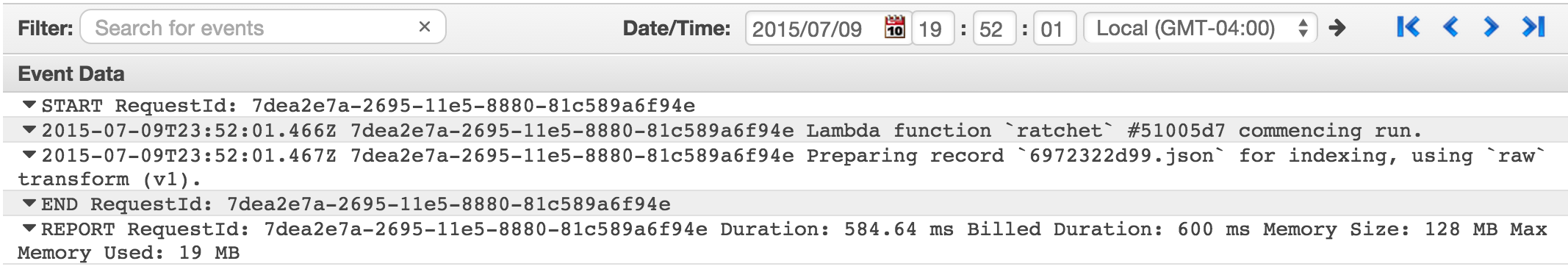

REPORT RequestId: 7dea2e7a-2695-11e5-8880-81c589a6f94e Duration: 584.64 ms Billed Duration: 600 ms Memory Size: 128 MB Max Memory Used: 19 MB

There’s no way you can get information this accurate by running your own timers and profilers inside your function. We wanted to get it!
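To make the REPORT line concrete, here is a minimal Python sketch that pulls the same fields out of it with a regular expression. The regex and the `parse_report` name are my own illustration, not part of Lambda or the plugin; it assumes the fields are separated by whitespace (tabs in the raw log, sometimes with a stray space before a tab).

```python
import re
from typing import Optional

# Hypothetical regex for a Lambda REPORT line. "\s+" accepts tabs, spaces,
# or a space followed by a tab between fields.
REPORT_RE = re.compile(
    r"REPORT RequestId: (?P<request_id>[0-9a-f-]{36})\s+"
    r"Duration: (?P<duration>\d+(?:\.\d+)?) ms\s+"
    r"Billed Duration: (?P<billed_duration>\d+) ms\s+"
    r"Memory Size: (?P<memory_size>\d+) MB\s+"
    r"Max Memory Used: (?P<memory_used>\d+) MB"
)


def parse_report(line: str) -> Optional[dict]:
    """Return the REPORT fields as typed values, or None for any other line."""
    m = REPORT_RE.search(line)
    if m is None:
        return None
    return {
        "request_id": m["request_id"],
        "duration": float(m["duration"]),          # fractional milliseconds
        "billed_duration": int(m["billed_duration"]),
        "memory_size": int(m["memory_size"]),      # configured memory, MB
        "memory_used": int(m["memory_used"]),      # peak memory, MB
    }
```

Run against the REPORT line above, `parse_report` returns the request ID as a string, the duration as the float 584.64, and the billed duration, memory size and memory used as the integers 600, 128 and 19.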

logstash-input-cloudwatch-logs

I’ve just released the first beta version of logstash-input-cloudwatch-logs. Initial design has focused heavily on the Lambda -> CloudWatch Logs -> ELK use case.

Update 2017-08-04: A stable release is now available.

How it works

You specify a Log Group to stream from, and this input plugin will find and consume all Log Streams within it. The plugin will poll for streams with new records and consume only the newest records.
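The polling behaviour described above can be modelled in a few lines of Python. This is a simplified, in-memory sketch under my own assumptions: the `Event` and `GroupPoller` names are hypothetical, and the real plugin is a Ruby gem that talks to the CloudWatch Logs API rather than receiving a list. It tracks the newest timestamp seen per log stream and hands back only the events past that mark.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class Event:
    stream: str      # log stream name inside the group
    timestamp: int   # milliseconds since the epoch
    message: str


@dataclass
class GroupPoller:
    """Hypothetical in-memory model of polling one log group.

    Keeps the newest timestamp seen per log stream; each poll returns only
    events newer than that mark, so already-consumed records are skipped.
    """
    last_seen: Dict[str, int] = field(default_factory=dict)

    def poll(self, events: Iterable[Event]) -> List[Event]:
        fresh: List[Event] = []
        # Order by stream, then time, so output is chronological per stream.
        for ev in sorted(events, key=lambda e: (e.stream, e.timestamp)):
            # An unseen stream starts at -1, so all of its events count as new.
            if ev.timestamp > self.last_seen.get(ev.stream, -1):
                fresh.append(ev)
        # Advance each stream's mark only after the whole batch is collected.
        for ev in fresh:
            self.last_seen[ev.stream] = max(self.last_seen.get(ev.stream, -1), ev.timestamp)
        return fresh
```

Each call to `poll` receives everything currently in the group, so a brand-new stream is picked up automatically. Because the comparison is strictly "newer than", an event arriving late with the same timestamp as the mark would be skipped; a production implementation has to decide how to handle that edge.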

Update 2017-08-04: You may want to see the plugin documentation itself. The 1.0 release introduced more configuration options, including the ability to poll multiple log groups with a single input, or poll by a known prefix.
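Conceptually, polling several groups or polling by prefix comes down to deciding which log group names one input should cover. The sketch below is hypothetical (the `select_log_groups` function and its `by_prefix` flag are my own names, not the plugin’s configuration options; see the plugin documentation for those) and only illustrates exact-name versus prefix selection.

```python
from typing import Iterable, List


def select_log_groups(available: Iterable[str], configured: List[str], by_prefix: bool) -> List[str]:
    """Hypothetical resolution of which log groups a single input polls.

    With by_prefix=False each configured entry must name a group exactly;
    with by_prefix=True each entry is a prefix, and every group that starts
    with any of them is selected.
    """
    groups = list(available)
    if by_prefix:
        chosen = [g for g in groups if any(g.startswith(p) for p in configured)]
    else:
        wanted = set(configured)
        chosen = [g for g in groups if g in wanted]
    return sorted(chosen)
```

For example, with the made-up groups `/aws/lambda/orders`, `/aws/lambda/users` and `/ecs/web`, the prefix `/aws/lambda/` selects the two Lambda groups, which is handy when every function in an account should flow into ELK.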

Try it yourself

- Download the latest release of the plugin and install it by running:

bin/plugin install <download path>/logstash-input-cloudwatch_logs-0.9.0.gem

- Install the plugin from rubygems:

bin/logstash-plugin install logstash-input-cloudwatch_logs

For further information, see the Logstash Documentation on Installing Plugins.

Sample config


input {
  cloudwatch_logs {
    log_group => "/aws/lambda/my-lambda"
    access_key_id => "AKIAXXXXXX"
    secret_access_key => "SECRET"
    type => "lambda"
  }
}

filter {
  # 1. Generic console.log output: "<timestamp>\t<request id>\t<message>".
  #    Strip the timestamp and keep only the message text.
  #    tag_on_failure => [] because each line matches only one of the three patterns.
  grok {
    match => { "message" => "%{TIMESTAMP_ISO8601}\t%{UUID:[lambda][request_id]}\t%{GREEDYDATA:message}" }
    overwrite => [ "message" ]
    tag_on_failure => []
  }

  # 2. START and END markers that Lambda writes around every invocation.
  grok {
    match => { "message" => "(?:START|END) RequestId: %{UUID:[lambda][request_id]}" }
    tag_on_failure => []
  }

  # 3. REPORT line carrying the duration and memory statistics.
  grok {
    match => { "message" => "REPORT RequestId: %{UUID:[lambda][request_id]}\tDuration: %{BASE16FLOAT:[lambda][duration]} ms\tBilled Duration: %{BASE16FLOAT:[lambda][billed_duration]} ms \tMemory Size: %{BASE10NUM:[lambda][memory_size]} MB\tMax Memory Used: %{BASE10NUM:[lambda][memory_used]} MB" }
    tag_on_failure => []
  }

  # Grok captures are strings; convert them to numbers for aggregation.
  # Duration is fractional (e.g. 584.64 ms), so keep it as a float.
  mutate {
    convert => {
      "[lambda][duration]" => "float"
      "[lambda][billed_duration]" => "integer"
      "[lambda][memory_size]" => "integer"
      "[lambda][memory_used]" => "integer"
    }
  }
}

output {
  stdout { codec => rubydebug }
}

We apply three grok filters to our log entries. The first matches the generic log message, which is what appears in the stream when you console.log something. It strips the timestamp and pulls out the [lambda][request_id] field for indexing.
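The first grok pattern can be approximated in Python to see exactly what it keeps and what it throws away. This is a sketch under my own assumptions: `GENERIC_RE` and `parse_generic` are hypothetical names, and the timestamp part is a simplified stand-in for grok’s `TIMESTAMP_ISO8601`, not a copy of it.

```python
import re
from typing import Optional, Tuple

# Rough counterpart of:
#   %{TIMESTAMP_ISO8601}\t%{UUID:[lambda][request_id]}\t%{GREEDYDATA:message}
# The timestamp regex is simplified; the UUID regex follows the 8-4-4-4-12 hex layout.
GENERIC_RE = re.compile(
    r"\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}(?:\.\d+)?Z?"
    r"\t(?P<request_id>[0-9a-fA-F]{8}-(?:[0-9a-fA-F]{4}-){3}[0-9a-fA-F]{12})"
    r"\t(?P<message>.*)"
)


def parse_generic(line: str) -> Optional[Tuple[str, str]]:
    """Return (request_id, message) with the timestamp stripped, or None."""
    m = GENERIC_RE.search(line)
    if m is None:
        return None
    return m["request_id"], m["message"]
```

Given a made-up line such as `2015-07-09T17:47:39.123Z<TAB>7dea2e7a-2695-11e5-8880-81c589a6f94e<TAB>user 42 signed in`, the sketch returns the request ID and `user 42 signed in`; START, END and REPORT lines have no leading timestamp, so they fall through to the other patterns.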

The second grok filter handles the START and END log messages.
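A Python equivalent of the second pattern shows why it is safe to leave unanchored: like grok, `re.search` matches anywhere in the line, so any text after the UUID (newer runtimes append a version label to START, for example) does not break the match. The names are hypothetical, and unlike the grok pattern, which uses a non-capturing group, this sketch also records whether the line was a START or an END.

```python
import re
from typing import Optional, Tuple

# Counterpart of: (?:START|END) RequestId: %{UUID:[lambda][request_id]}
# The kind is captured here for illustration; the grok version discards it.
BOUNDARY_RE = re.compile(
    r"(?P<kind>START|END) RequestId: "
    r"(?P<request_id>[0-9a-fA-F]{8}-(?:[0-9a-fA-F]{4}-){3}[0-9a-fA-F]{12})"
)


def parse_boundary(line: str) -> Optional[Tuple[str, str]]:
    """Return ("START" or "END", request_id), or None for any other line."""
    m = BOUNDARY_RE.search(line)  # unanchored, like grok
    if m is None:
        return None
    return m["kind"], m["request_id"]
```

Because "REPORT" contains neither "START " nor "END " directly before "RequestId", REPORT lines are left for the third pattern.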

The third grok filter handles the precious REPORT message type and gives us the following wonderful fields:

- [lambda][duration]
- [lambda][billed_duration]
- [lambda][memory_size]
- [lambda][memory_used]

A final mutate filter converts these fields from strings to numbers so they can be graphed and aggregated.

As we prepare to enter production on this new set of services, this should give us the visibility we need to decide how to properly size our Lambda functions, and to analyze any errors we encounter!
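With the REPORT fields stored as numbers, the sizing question becomes simple arithmetic. In practice you would build this in Kibana; the Python sketch below just shows the same calculation offline. The `sizing_summary` helper is hypothetical, and it assumes every record comes from the same function configuration (one memory size). Duration stays a float because converting 584.64 ms to an integer would silently drop the fraction.

```python
from statistics import mean
from typing import Dict, List


def sizing_summary(reports: List[Dict]) -> Dict[str, float]:
    """Summarise parsed REPORT records for one function (hypothetical helper).

    Each record needs numeric duration, billed_duration, memory_size and
    memory_used fields, as produced by the REPORT grok and mutate steps.
    Assumes all records share one configured memory size.
    """
    if not reports:
        raise ValueError("need at least one REPORT record")
    configured = reports[0]["memory_size"]
    peak_used = max(r["memory_used"] for r in reports)
    return {
        "avg_duration_ms": mean(r["duration"] for r in reports),
        "max_duration_ms": max(r["duration"] for r in reports),
        "peak_memory_mb": peak_used,
        # Share of configured memory never touched by the busiest invocation.
        "memory_headroom_pct": 100.0 * (configured - peak_used) / configured,
    }
```

A large, stable headroom suggests the function could run with less memory, while durations creeping towards the timeout suggest the opposite; note that on Lambda, memory size also affects the CPU share, so both numbers are worth watching together.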

Feedback

How has this worked for you? Found a problem? Let me know by opening an issue!